I am a Ph.D. student in the Department of Computer Sciences at the University of Wisconsin–Madison, advised by Prof. Sharon Li, and an incoming intern at Meta Superintelligence Labs. Prior to my Ph.D., I earned my M.S. degree in AI from the University of Seoul, where I was advised by Prof. Kyungwoo Song and Prof. Jiyoung Jung. I also had the opportunity to work with Zhi-Qi Cheng, Alexander Hauptmann, and David Mortensen during a visiting period at Carnegie Mellon University, and with Dongyoon Han and Sangdoo Yun during my internship at NAVER AI Lab.

I am broadly interested in machine learning fundamentals and trustworthy AI. Recently, I have been focusing on understanding and improving the robustness of multimodal LLMs under distribution shifts and uncertainty quantification of LLM agents.

News

Feb. 2026, I will be interning at Meta Superintelligence Labs (PAR Team) this summer in Menlo Park, CA!

Jan. 2026, Started a collaboration with Argonne National Laboratory as a Visiting Student-Subcontractor!

Jan. 2026, Three papers were accepted to ICLR 2026, including one Oral presentation!

Selected Publications and Preprints

(* denotes equal contribution)

Refer to my Google Scholar profile and CV for the full publication list.

Towards Reducible Uncertainty Modeling for Reliable Large Language Model Agents

Changdae Oh, Seongheon Park, To Eun Kim, Jiatong Li, Wendi Li, Samuel Yeh, Xuefeng Du, Hamed Hassani, Paul Bogdan, Dawn Song, Sharon Li

[paper]

arXiv preprint 2026

Understanding Language Prior of LVLMs by Contrasting Chain-of-Embedding

Lin Long*, Changdae Oh*, Seongheon Park, Sharon Li

[paper] [code]

ICLR 2026

How Do Transformers Learn to Associate Tokens: Gradient Leading Terms Bring Mechanistic Interpretability

Shawn Im, Changdae Oh, Zhen Fang, Sharon Li

[paper] [code]

ICLR 2026 (Oral Presentation)

General Exploratory Bonus for Optimistic Exploration in RLHF

Wendi Li, Changdae Oh, Sharon Li

[paper] [code]

ICLR 2026

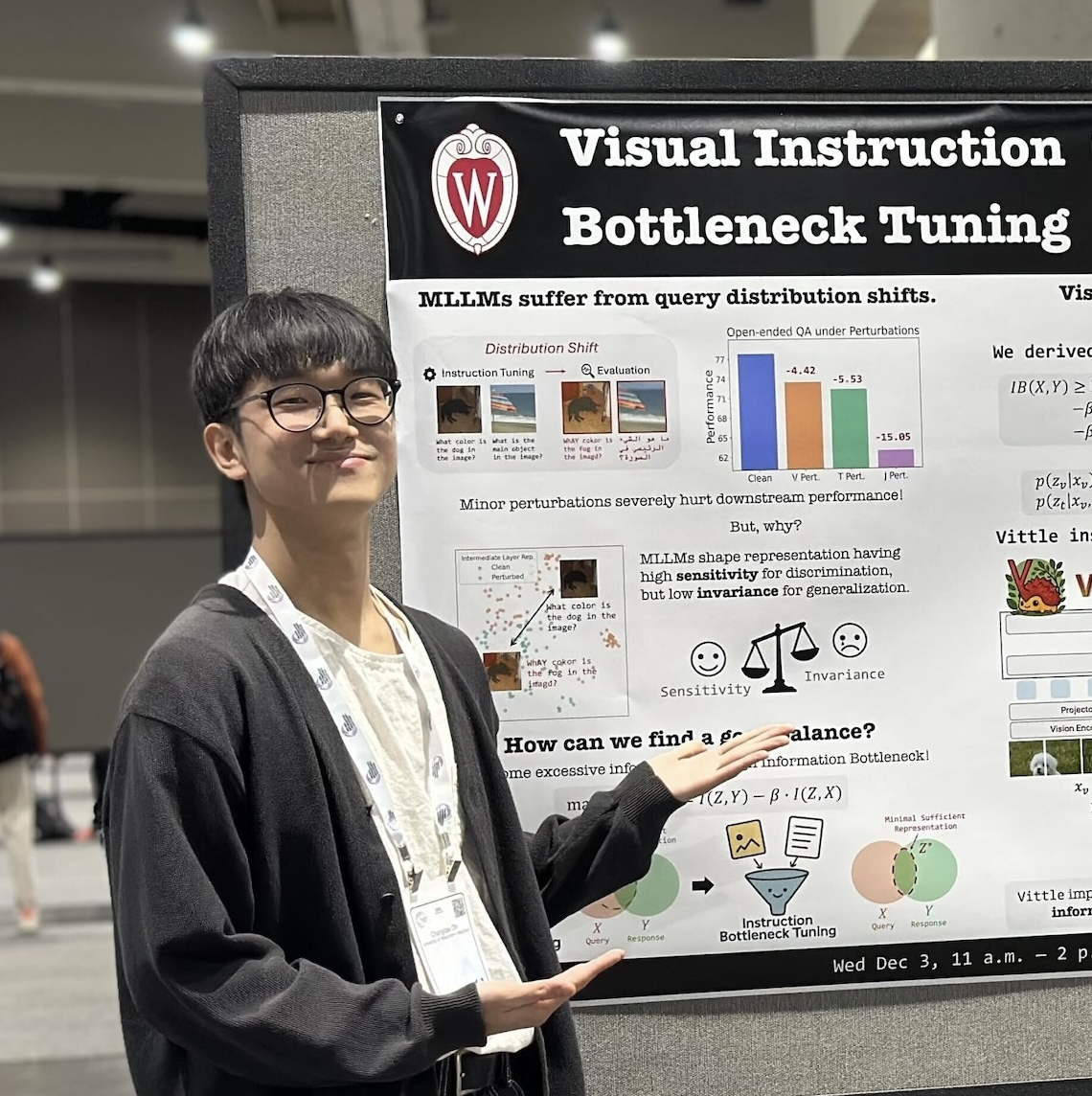

NeurIPS 2025, Workshop on Socially Responsible and Trustworthy Foundation Models (Oral Presentation; 9/136=6.6%)

Visual Instruction Bottleneck Tuning

Changdae Oh, Jiatong Li, Shawn Im, Sharon Li

[paper] [code]

NeurIPS 2025

ICML 2025, Workshop on Reliable and Responsible Foundation Models (Oral Presentation; 6/176=3.4%)

Understanding Multimodal LLMs Under Distribution Shifts: An Information-Theoretic Approach

Changdae Oh, Zhen Fang, Shawn Im, Xuefeng Du, Yixuan Li

[paper] [code]

ICML 2025

ICLR 2025, QUESTION Workshop (Oral Presentation)

DaWin: Training-free Dynamic Weight Interpolation for Robust Adaptation

[paper] [code]

Changdae Oh, Yixuan Li, Kyungwoo Song, Sangdoo Yun, Dongyoon Han

ICLR 2025

NeurIPS 2024, Workshop on Adaptive Foundation Models

Towards Calibrated Robust Fine-Tuning of Vision-Language Models

Changdae Oh*, Hyesu Lim*, Mijoo Kim, Dongyoon Han, Sangdoo Yun, Jaegul Choo, Alexander Hauptmann, Zhi-Qi Cheng, Kyungwoo Song

[paper] [code]

NeurIPS 2024

NeurIPS 2023, Workshop on Distribution Shifts

Geodesic Multi-Modal Mixup for Robust Fine-tuning

Changdae Oh*, Junhyuk So*, YongTaek Lim, Hoyoon Byun, Minchul Shin, Jong-June Jeon, Kyungwoo Song

[paper] [code]

NeurIPS 2023

BlackVIP: Black-Box Visual Prompting for Robust Transfer Learning

[paper] [code]

Changdae Oh, Hyeji Hwang, Hee-young Lee, YongTaek Lim, Geunyoung Jung, Jiyoung Jung, Hosik Choi, Kyungwoo Song

CVPR 2023

Learning Fair Representation via Distributional Contrastive Disentanglement

[paper] [code]

Changdae Oh, Heeji Won, Junhyuk So, Taero Kim, Yewon Kim, Hosik Choi, Kyungwoo Song

KDD 2022

Education

Ph.D. in Computer Science, University of Wisconsin-Madison

advisor: Prof. Sharon Li

Sep. 2024 ~ Present

M.S. in Artificial Intelligence, University of Seoul

advisors: Prof. Kyungwoo Song and Prof. Jiyoung Jung

Mar. 2022 - Aug. 2024

B.S. in Statistics, University of Seoul

Mar. 2016 - Feb. 2022

Experience

- Research Scientist Intern, Meta Superintelligence Labs

  Mentor: Julian Katz-Samuels, May 2026 ~ current

- Visiting Student-Subcontractor, Argonne National Laboratory

  Mentor: Tanwi Mallick, Feb. 2026 ~ current

- Research Intern, NAVER AI Lab

  Mentors: Dongyoon Han and Sangdoo Yun, Apr. 2023 ~ Aug. 2024

  - DaWin: Training-free Dynamic Weight Interpolation for Robust Adaptation, ICLR 2025

- Visiting Scholar, Carnegie Mellon University

  Mentor: Zhi-Qi Cheng, Sep. 2023 ~ Feb. 2024

  - Towards Calibrated Robust Fine-Tuning of Vision-Language Models, NeurIPS 2024

  - Mitigating the Linguistic Gap with Phonemic Representations for Robust Cross-lingual Transfer, EMNLP 2024 Workshop

Talks

- Jan. 2026, MLAI Lab @ Yonsei University, “On the Dynamic Reliability of Adaptive Foundation Models”

- Jun. 2025, ResearchTrend.AI, “Visual Instruction Bottleneck Tuning”

Academic Services

- Conference Reviewer:

  - NeurIPS 2025, 2024

  - ICML 2026, 2025 (Top Reviewer)

  - ICLR 2026, 2025

  - AAAI 2025

  - AISTATS 2026

  - CVPR 2024

- Conference Volunteer: NeurIPS’24, KDD’22

- Workshop Committee

- Journal Reviewer: TMLR, Neural Networks